DeepSeek vs OpenAI vs Gemini: Best LLM for AI Apps

Compare DeepSeek, OpenAI, and Gemini LLMs. Find the most suitable large language model for your AI application needs.

Choosing the right Large Language Model (LLM) for your AI app or wrapper can significantly impact performance, cost, and deployment ease. This guide compares DeepSeek, OpenAI (including GPT-4 and o3), Gemini, Grok, Qwen, and Meta's Llama to help you make an informed decision based on your specific needs as of March 2025.

Pro Tip:

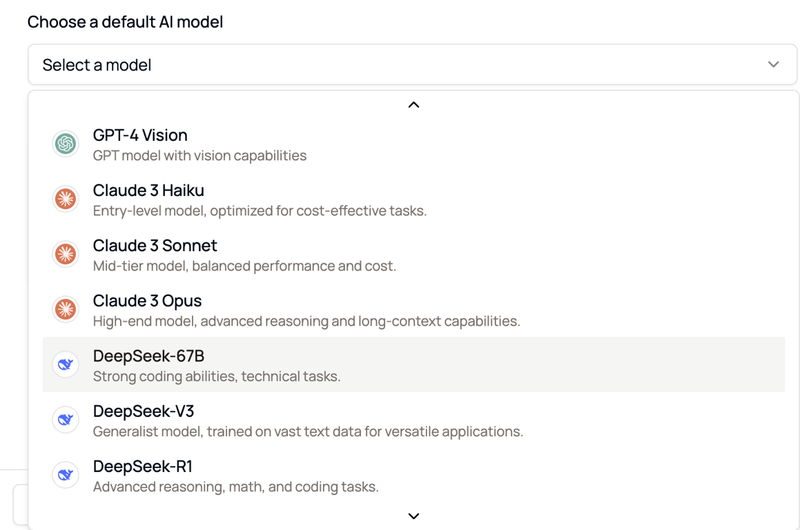

If you are unsure which LLM to use for your AI wrapper, sign up on BuildThatIdea and start building AI apps with any of the top LLMs, compare the results, and make a data-driven decision. BuildThatIdea gives you access to all the popular, widely used LLMs in a single interface.

AI Model Overview

Each model has unique strengths:

- DeepSeek R1: An open-source model from a Chinese startup, known for efficiency in coding and math tasks.

- OpenAI Models: Proprietary models like GPT-4 and o3, versatile for various applications, accessed via APIs.

- Gemini: Google's models, such as Gemini 2.0 Pro and Flash, excel in large context processing, ideal for document handling.

- Grok: Built by xAI, designed for helpful, truthful answers with real-time web search, including Grok 3 and its reasoning variant.

- Qwen: Alibaba's models like QwQ-32B, strong in technical domains, open-source for flexibility.

- Meta Llama: Llama 3.3 70B from Meta, cost-effective and open-source, widely used for general tasks.

Comparison and Use Cases of AI Models

Below is a detailed comparison based on key metrics, with recommendations for specific use cases:

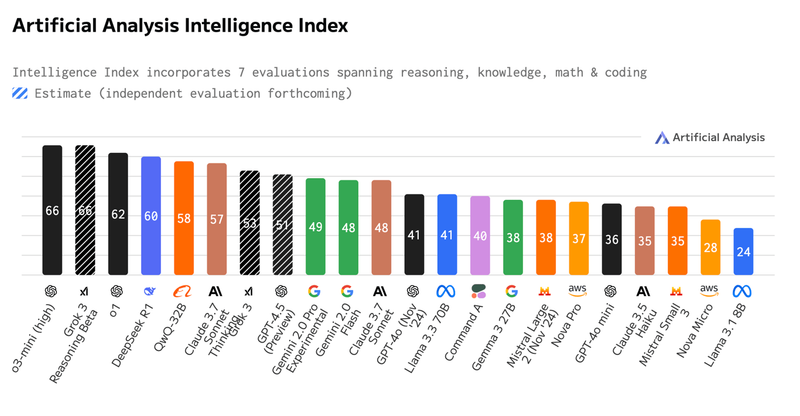

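| Model | Intelligence | Output Speed (t/s) | Price ($/M tokens) | Context Window | Coding Index | Math Index | MMLU-Pro (%) | GPQA Diamond (%) |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| DeepSeek R1 | 62 | 28 | 6 | 128k | 49 | 85 | 84 | 71 |
| Gemini 2.0 Pro Exp | 49 | 116 | Not specified | 2.00M | 38 | 72 | 80 | 71 |
| Gemini 2.0 Flash | 41 | 255 | 26.3 | 1.00M | 36 | 64 | 76 | 66 |
| Grok 3 Reasoning Beta | 66 | Not specified | Not specified | 1.00M | Not specified | Not specified | Not specified | 80 |
| Grok 3 | 48 | Not specified | Not specified | 1.00M | Not specified | Not specified | Not specified | 77 |
| QwQ-32B | 60 | Not specified | 93.8 | 200k | 49 | 92 | 78 | 62 |
| Meta Llama 3.3 70B | 41 | 83 | 1.8 | 200k | 33 | 45 | 71 | 50 |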

- Customer Service: Gemini 2.0 Pro Experimental (2.00M context window) and Flash (1.00M) are ideal for handling long conversations, while DeepSeek R1 (128k) is cost-effective.

- Coding Assistance: DeepSeek R1 (Coding Index: 49, HumanEval: 98%) and QwQ-32B (Coding Index: 49) are top for code generation.

- Research and Math: QwQ-32B (Math Index: 92, MMLU-Pro: 78%) and DeepSeek R1 (Math Index: 85, MMLU-Pro: 84%) excel.

- Content Generation: Grok 3 Reasoning Beta (Intelligence: 66) and DeepSeek R1 (62) offer strong capabilities.

- Real-Time Information: Grok stands out with real-time web search, perfect for up-to-date data needs.
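To make these recommendations reproducible, the benchmark figures listed above can be stored as plain data and queried per metric. The following is a minimal sketch, not a definitive tool: the dictionary keys and the `top_models` helper are hypothetical names introduced for illustration, and `None` stands in for values the comparison lists as "Not specified".

```python
from typing import Dict, List, Optional

# Benchmark figures copied from the comparison above.
# None marks values listed as "Not specified".
MODELS: Dict[str, Dict[str, Optional[float]]] = {
    "DeepSeek R1": {"intelligence": 62, "coding": 49, "math": 85, "mmlu_pro": 84, "gpqa": 71},
    "Gemini 2.0 Pro Exp": {"intelligence": 49, "coding": 38, "math": 72, "mmlu_pro": 80, "gpqa": 71},
    "Gemini 2.0 Flash": {"intelligence": 41, "coding": 36, "math": 64, "mmlu_pro": 76, "gpqa": 66},
    "Grok 3 Reasoning Beta": {"intelligence": 66, "coding": None, "math": None, "mmlu_pro": None, "gpqa": 80},
    "Grok 3": {"intelligence": 48, "coding": None, "math": None, "mmlu_pro": None, "gpqa": 77},
    "QwQ-32B": {"intelligence": 60, "coding": 49, "math": 92, "mmlu_pro": 78, "gpqa": 62},
    "Meta Llama 3.3 70B": {"intelligence": 41, "coding": 33, "math": 45, "mmlu_pro": 71, "gpqa": 50},
}


def top_models(metric: str, n: int = 2) -> List[str]:
    """Return the n highest-scoring models for a metric, skipping missing values."""
    scored = [
        (name, row[metric])
        for name, row in MODELS.items()
        if row[metric] is not None
    ]
    # sort() is stable, so tied models keep their table order.
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return [name for name, _ in scored[:n]]


if __name__ == "__main__":
    for metric in ("coding", "math", "intelligence", "gpqa"):
        print(metric, top_models(metric))
```

Models with missing scores are skipped rather than treated as zero, so Grok simply does not appear in the coding or math rankings.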

Comprehensive Analysis of LLMs for Building AI Apps

The AI landscape is rapidly evolving, with Large Language Models (LLMs) playing a pivotal role in developing AI applications and wrappers. This analysis compares DeepSeek, OpenAI (including GPT-4 and o3), Gemini, Grok, Qwen, and Meta's Llama to assist developers in selecting the most suitable model for their needs. The comparison is based on recent benchmarks and insights, ensuring relevance to current technological advancements.

Each model has distinct origins and features:

- DeepSeek R1: Developed by DeepSeek, a Chinese AI company founded in 2023, DeepSeek R1 is an open-source model that excels in coding and math. Its Mixture-of-Experts architecture activates only 37 billion of its 671 billion parameters per token, reducing inference costs (DeepSeek Wikipedia). The frequently quoted figures of 2 trillion training tokens, HumanEval Pass@1 of 73.78, and GSM8K 0-shot of 84.1 describe the company's earlier DeepSeek LLM 67B model rather than R1.

- OpenAI Models: OpenAI's models, such as GPT-4 and o3, are proprietary and known for versatility across tasks. The o3-mini, for instance, made 39% fewer major errors than o1-mini, and testers preferred its responses 56% of the time.

- Gemini: Google's Gemini models, including 2.0 Pro and 2.0 Flash, offer large context windows (up to 2M tokens), ideal for document processing. Gemini 2.0 Flash is noted for cost-efficiency, quoted at $0.7 per million tokens in customer service contexts.

- Grok: Built by xAI, Grok is designed for helpful, truthful responses with real-time web search capabilities. Grok 3 and its Reasoning Beta version are highlighted for high intelligence scores, with Grok 3 Reasoning Beta at 66 on the Artificial Analysis Intelligence Index (Artificial Analysis Models).

- Qwen: Alibaba's Qwen models, such as QwQ-32B, are open-source, performing strongly in math (Math Index: 92) and coding (Coding Index: 49), trained on over 20 trillion tokens.

- Meta Llama: Meta's Llama 3.3 70B is open-source, cost-effective at $1.8 per million tokens, and widely adopted for general tasks, with a context window of 200k tokens.

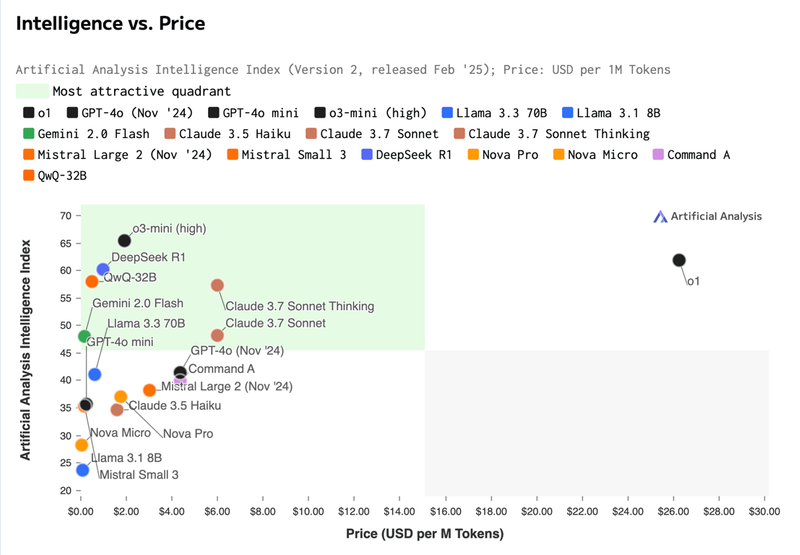

Cost Comparison of LLMs

- Meta Llama 3.3 70B is the cheapest at $1.8 per million tokens, ideal for budget-conscious users.

- DeepSeek R1 at $6 is competitive, while QwQ-32B at $93.8 is the most expensive, reflecting its high performance in specific tasks.

- Gemini 2.0 Flash is priced at $26.3 per million tokens in the comparison above, though it is quoted at $0.7 per million tokens in some contexts.
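Per-million-token prices are easier to compare once they are turned into a monthly bill. The sketch below does that arithmetic with the prices quoted in this article. The workload (1,000 requests a day at 2,000 tokens each) and the `monthly_cost` helper are assumptions made purely for illustration, and the calculation applies one blended rate to all tokens rather than separate input and output prices.

```python
# Prices in $ per million tokens, as quoted in this article.
PRICE_PER_M_TOKENS = {
    "Meta Llama 3.3 70B": 1.8,
    "DeepSeek R1": 6.0,
    "Gemini 2.0 Flash": 26.3,
    "QwQ-32B": 93.8,
}


def monthly_cost(model: str, requests_per_day: int,
                 tokens_per_request: int, days: int = 30) -> float:
    """Estimate monthly spend in dollars for a model at a blended token price."""
    total_tokens = requests_per_day * tokens_per_request * days
    return round(total_tokens / 1_000_000 * PRICE_PER_M_TOKENS[model], 2)


if __name__ == "__main__":
    # Hypothetical workload: 1,000 requests/day, 2,000 tokens each = 60M tokens/month.
    for name in PRICE_PER_M_TOKENS:
        print(f"{name}: ${monthly_cost(name, 1_000, 2_000):,.2f}")
```

At this assumed volume of 60 million tokens a month, the gap between the cheapest and most expensive model is the difference between roughly a hundred dollars and several thousand.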

Context Window Size Is a Crucial Factor for Long-Form Tasks:

- Gemini 2.0 Pro Experimental offers 2.00M tokens, the largest, followed by Gemini 2.0 Flash at 1.00M and Grok versions at 1.00M.

- DeepSeek R1 has 128k, sufficient for many applications but less than Gemini for extensive document processing.
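A quick way to apply these limits is to estimate a document's token count and check which context windows can hold it. The sketch below is a rough approximation only: the 4-characters-per-token ratio is a rule of thumb for English text rather than any model's real tokenizer, "128k" is read as 128,000 tokens, and the helper names and the output reserve are hypothetical.

```python
from typing import List

# Context window sizes in tokens, as listed in this article.
CONTEXT_WINDOW_TOKENS = {
    "Gemini 2.0 Pro Experimental": 2_000_000,
    "Gemini 2.0 Flash": 1_000_000,
    "Grok 3": 1_000_000,
    "DeepSeek R1": 128_000,
}

# Assumption: about 4 characters per token for English text.
# Real tokenizers vary by model and language.
CHARS_PER_TOKEN = 4


def estimate_tokens(text: str) -> int:
    """Approximate token count using ceiling division on character length."""
    return -(-len(text) // CHARS_PER_TOKEN)


def models_that_fit(text: str, reserved_for_output: int = 4_000) -> List[str]:
    """List models whose window holds the text plus room for the reply."""
    needed = estimate_tokens(text) + reserved_for_output
    return [name for name, window in CONTEXT_WINDOW_TOKENS.items() if window >= needed]


if __name__ == "__main__":
    long_document = "x" * 1_000_000  # about 250k estimated tokens
    print(models_that_fit(long_document))
```

For production use, the estimate should be replaced with the provider's own token counter, since the real count can differ noticeably from this heuristic.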

Task-Specific Performance of Different LLMs

- Coding: DeepSeek R1 and QwQ-32B tie at a Coding Index of 49, with DeepSeek R1 excelling in HumanEval at 98%, making it a top choice for coding assistance (Artificial Analysis Models).

- Math: QwQ-32B leads with a Math Index of 92, followed by DeepSeek R1 at 85, both outperforming others like Gemini 2.0 Pro Experimental at 72.

- MMLU-Pro: DeepSeek R1 scores 84%, ahead of Gemini 2.0 Pro Experimental at 80% and Meta Llama at 71%, indicating strong academic performance.

- GPQA Diamond: Grok 3 Reasoning Beta leads at 80%, with DeepSeek R1 at 71%, showing reasoning prowess.

LLM Recommendations by Use Case

Here are tailored recommendations on which AI Model to use for your specific use case:

- Customer Service: Gemini 2.0 Pro Experimental and Flash are ideal due to their large context windows (2.00M and 1.00M). Gemini 2.0 Flash is also cost-effective, quoted at $0.7 per million tokens in some customer service evaluations. DeepSeek R1 is a viable lower-cost alternative with a 128k context window.

- Coding Assistance: DeepSeek R1 (HumanEval: 98%) and QwQ-32B (Coding Index: 49) are top, with DeepSeek's open-source nature adding flexibility for on-premise deployment.

- Research and Math: QwQ-32B (Math Index: 92) and DeepSeek R1 (Math Index: 85) are best, with Qwen's high MMLU-Pro score of 78% supporting academic tasks.

- Content Generation: Grok 3 Reasoning Beta (Intelligence: 66) and DeepSeek R1 (62) offer strong capabilities, with Grok's real-time web search adding value for dynamic content (Artificial Analysis Models).

- Real-Time Information Access: Grok stands out with real-time web search, making it ideal for applications needing up-to-date data, as highlighted in its design focus.
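In an AI wrapper, recommendations like these often end up as a small routing table: each use case maps to an ordered list of preferred models, and the app picks the first one it has access to. The sketch below assumes that design. The use-case keys, the `pick_model` helper, and the choice of "Grok 3" to represent Grok are illustrative assumptions, not part of any platform's API.

```python
from typing import Dict, List, Optional, Set

# Ordered preferences per use case, taken from the recommendations above.
RECOMMENDATIONS: Dict[str, List[str]] = {
    "customer_service": ["Gemini 2.0 Pro Experimental", "Gemini 2.0 Flash", "DeepSeek R1"],
    "coding": ["DeepSeek R1", "QwQ-32B"],
    "research_math": ["QwQ-32B", "DeepSeek R1"],
    "content_generation": ["Grok 3 Reasoning Beta", "DeepSeek R1"],
    "real_time_info": ["Grok 3"],
}


def pick_model(use_case: str, available: Set[str]) -> Optional[str]:
    """Return the first recommended model that is available, or None."""
    for model in RECOMMENDATIONS.get(use_case, []):
        if model in available:
            return model
    return None


if __name__ == "__main__":
    my_models = {"DeepSeek R1", "Gemini 2.0 Flash"}
    for case in RECOMMENDATIONS:
        print(case, "->", pick_model(case, my_models))
```

Returning `None` when nothing matches keeps the fallback decision explicit, so the calling code can choose a default model or surface an error.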

Cost and Deployment Considerations

- Cost-Effectiveness: Meta Llama 3.3 70B at $1.8 per million tokens is the most budget-friendly, suitable for startups. DeepSeek R1 at $6 offers a middle ground, while QwQ-32B at $93.8 is for high-performance needs.

- Accessibility: Open-source models like DeepSeek R1, Qwen, and Meta Llama allow on-premise deployment, reducing reliance on cloud APIs. Proprietary models from OpenAI and Google (Gemini) require API access but come with robust platforms for integration.

- Deployment Flexibility: DeepSeek's open-source nature, as seen in its GitHub repository, enables customization, while Gemini's large context windows are better suited for cloud-based applications (DeepSeek GitHub).
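These cost and deployment constraints can be combined into a simple shortlist filter. The sketch below is illustrative only: the `ModelOption` class and `shortlist` function are hypothetical, prices are the per-million-token figures quoted in this article, and only models whose licensing and price are both stated here are included.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class ModelOption:
    name: str
    open_source: bool          # can be deployed on-premise
    price_per_m_tokens: float  # $ per million tokens, as quoted in this article


OPTIONS = [
    ModelOption("Meta Llama 3.3 70B", True, 1.8),
    ModelOption("DeepSeek R1", True, 6.0),
    ModelOption("QwQ-32B", True, 93.8),
    ModelOption("Gemini 2.0 Flash", False, 26.3),
]


def shortlist(max_price: float, require_open_source: bool) -> List[str]:
    """Return model names within budget, cheapest first."""
    picks = [
        option for option in OPTIONS
        if option.price_per_m_tokens <= max_price
        and (option.open_source or not require_open_source)
    ]
    return [option.name for option in sorted(picks, key=lambda o: o.price_per_m_tokens)]


if __name__ == "__main__":
    print(shortlist(max_price=10, require_open_source=True))
    print(shortlist(max_price=30, require_open_source=False))
```

A startup that needs on-premise deployment under $10 per million tokens would be left with Meta Llama 3.3 70B and DeepSeek R1, which matches the guidance above.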

Future Outlook and Controversies

There is ongoing debate around open-source vs. proprietary models: DeepSeek and Meta offer flexibility but may lack the ecosystem support of OpenAI and Gemini. DeepSeek's cost-efficiency, with reported training costs as low as $6 million for V3 compared to an estimated $100 million for OpenAI's GPT-4, has sparked discussions on accessibility. Grok's real-time capabilities are a unique selling point, but its pricing details remain unclear, which may slow adoption.

As the AI landscape evolves, monitoring updates is crucial, especially with recent releases like DeepSeek R1 in January 2025 and Gemini 2.0 in early 2025, which continue to push boundaries.

Verdict on Which AI Model to Use

DeepSeek R1 and Qwen are strong for technical tasks, Gemini for large-context applications, Grok for real-time information, and Meta Llama for cost-effective general use. Developers should balance performance, budget, and flexibility, and stay up to date with the rapidly changing AI field.
