Optimize AI Prompts for Better AI Interaction

Discover strategies to optimize AI prompts for enhanced AI responses and improved interaction. Boost efficiency in AI-powered apps.

The quality of interaction with large language models (LLMs) like OpenAI’s GPT-3 or o1 depends heavily on one factor: prompt engineering. The way we craft input text isn’t a casual exercise; it’s a deliberate, technical process that largely determines how well these models perform.

A well-designed prompt can transform vague or unhelpful outputs into precise, valuable responses, while a sloppy one leaves you sifting through irrelevant noise. This isn’t about guesswork; it’s about understanding how these systems process language and leveraging that knowledge to get results. Let’s dive into the principles and techniques that make prompts work, tailored for those who want to push AI beyond basic chatter into something genuinely useful.

What’s a Prompt, Really?

At its core, a prompt is the instruction set you feed into an AI model to steer its output. Think of it as the interface between your intent and the model’s capabilities. In technical terms, it’s the sequence of tokens the model conditions on: everything you include shapes what the model predicts next. For LLMs, this isn’t just about asking a question; it’s about defining the task, setting the scope, and providing enough context for the model to reason effectively. Get it right, and you’ll see outputs that are coherent, relevant, and on-point. Get it wrong, and you’re stuck with the digital equivalent of a shrug.

Why does this matter? Because prompt engineering directly impacts:

- Output precision: A tight prompt reduces the model’s tendency to hallucinate or ramble.

- Task alignment: It ensures the AI sticks to what you need, whether that’s code, analysis, or creative text.

- Efficiency: Well-crafted prompts cut down on the back-and-forth, saving you time and compute cycles.

In a field where models are growing more powerful by the day, mastering this skill is what separates casual users from those who can truly harness AI.

The Core Rules of Prompt Engineering

Let’s break this down into actionable principles. These aren’t suggestions—they’re the backbone of getting consistent, high-quality results from any LLM.

1. Be Specific and Clear

Vague prompts are the enemy. If you don’t tell the model exactly what you want, it’ll lean on its training priors and spit out something generic—or worse, off-topic. Specificity narrows the focus and cuts through the noise.

- Weak Prompt: “Tell me about AI.” Result: A sprawling mess of trivia.

- Strong Prompt: “Explain the role of transformers in modern AI, focusing on their impact on natural language processing.” Result: A targeted, meaty response.

Why it works: Clear parameters narrow the range of plausible completions, so the output is more focused and more consistent from one run to the next.

2. Load Up on Context

Context is the scaffolding that keeps the model grounded. Without it, you’re asking a billion-parameter black box to guess what you mean—and it’s not a mind reader.

- No Context: “Analyze the data.” Result: “What data?” says the model, flailing.

- With Context: “Analyze the dataset from the 2023 IPCC report and highlight trends in global temperature anomalies.” Result: A focused breakdown with real substance.

Why it works: Context conditions the model’s predictions on your domain, so it draws on the relevant knowledge instead of guessing what you meant.
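Context works best when the model can actually see the material. A plain model cannot open a report on its own, so unless it has a retrieval tool, the safer move is to paste the relevant data into the prompt. The Python sketch below illustrates that assumption: `build_analysis_prompt` is a hypothetical helper, the `<data>` delimiters are one common convention rather than a requirement, and the rows are placeholders, not IPCC figures.

```python
import csv
import io


def build_analysis_prompt(task: str, rows: list[dict[str, str]]) -> str:
    """Embed tabular data directly in the prompt so the model has concrete input to analyze."""
    if not rows:
        raise ValueError("rows must not be empty")
    buffer = io.StringIO()
    # lineterminator="\n" avoids the csv module's default "\r\n" line endings.
    writer = csv.DictWriter(buffer, fieldnames=list(rows[0].keys()), lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)
    # Delimit the data so the model can tell instructions apart from input.
    return f"{task}\n\nData (CSV):\n<data>\n{buffer.getvalue().strip()}\n</data>"


# Placeholder rows for illustration only; substitute the real dataset you want analyzed.
rows = [
    {"year": "YEAR_1", "anomaly_c": "VALUE_1"},
    {"year": "YEAR_2", "anomaly_c": "VALUE_2"},
]
prompt = build_analysis_prompt(
    "Analyze the temperature-anomaly data below and highlight the overall trend.",
    rows,
)
print(prompt)
```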

3. Kill Ambiguity

Ambiguous prompts are like handing the model a multiple-choice test with no correct answer. It’ll pick something, but good luck if it’s what you wanted.

- Ambiguous: “What’s up with tech?” Result: A grab bag of random tech facts.

- Unambiguous: “Discuss recent advancements in GPU architecture for AI training.” Result: A crisp, relevant summary.

Why it works: Clarity eliminates competing interpretations, tightening the output distribution.

4. Set the Tone and Style

A model’s default voice is a neutral, general-purpose register; if you want anything else, you have to ask for it. Whether you need a formal white paper or a snappy blog post, say so upfront.

- Tone Prompt: “Write a sarcastic take on AI hype.” Result: A biting, funny riff.

- Formal Prompt: “Provide a technical overview of AI’s role in predictive analytics.” Result: A polished, professional piece.

Why it works: Style directives shape the model’s lexical choices and structure, tailoring the output to your audience.

5. Give It a Blueprint

For complex tasks, don’t leave the structure to chance. Provide examples or templates to keep the model on track.

- Blueprint Prompt: “Write a research summary with sections: [Background, Methods, Findings].” Result: A neatly organized response.
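A blueprint is also easy to check after the fact. This hypothetical sketch renders the section list as headings in the prompt, then flags any section the response skipped; the `## Heading` convention is an assumption chosen because it is simple to parse.

```python
import re


def blueprint_prompt(task: str, sections: list[str]) -> str:
    """Ask for a response organized under a fixed set of '## Name' headings."""
    outline = "\n".join(f"## {name}" for name in sections)
    return f"{task}\nOrganize the response under exactly these headings, in this order:\n{outline}"


def missing_sections(response: str, sections: list[str]) -> list[str]:
    """Return the required headings that do not appear in the model's response."""
    found = set(re.findall(r"^##\s+(.+?)\s*$", response, flags=re.MULTILINE))
    return [name for name in sections if name not in found]


sections = ["Background", "Methods", "Findings"]
print(blueprint_prompt("Write a research summary of the attached paper.", sections))

# A made-up response that forgot one section.
draft = "## Background\n...\n## Findings\n..."
print(missing_sections(draft, sections))  # ['Methods']
```

If the list comes back non-empty, a short follow-up such as “Add the missing Methods section” is usually cheaper than regenerating the whole response.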

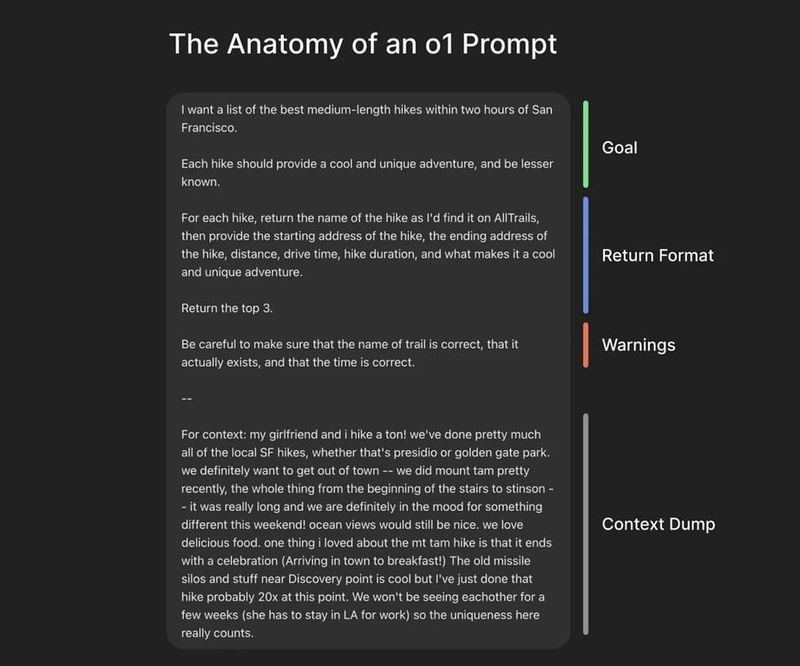

The Anatomy of an Effective Prompt: Insights from "The Anatomy of an o1 Prompt"

Now that we’ve covered the core principles, let’s explore how they can be operationalized into a structured framework. The infographic "The Anatomy of an o1 Prompt" provides a strong model for crafting prompts for OpenAI’s o1 reasoning models. It divides a prompt into four essential components: Goal, Return Format, Warnings, and Context Dump. This structure supports clarity, precision, and personalization, and it maps closely onto the principles we’ve discussed. Below, I’ll explain each component using the infographic’s hiking example, then show how it comes together in practice.

1. Goal: Define the Objective Clearly

The Goal is the cornerstone of your prompt: it’s where you specify exactly what you want the AI to do. In the infographic, the goal is: "I want a list of the best medium-length hikes within two hours of San Francisco. Each hike should provide a cool and unique adventure, and be lesser known." This is laser-focused: it outlines the task (a list of hikes), sets geographic and length constraints, and adds qualitative criteria (cool, unique, lesser-known).

Connection to Principles: This embodies specificity and clarity, ensuring the AI understands the task without room for misinterpretation.

2. Return Format: Specify the Desired Output Structure

The Return Format dictates how the AI should structure its response. In the infographic, it’s: "For each hike, return the name of the hike as I’d find it on AllTrails, then provide the starting address of the hike, the ending address of the hike, distance, drive time, hike duration, and what makes it a cool and unique adventure. Return the top 3." This is a detailed blueprint: it lists specific data points for each hike and caps the output at three entries, making it both comprehensive and concise.

Connection to Principles: This aligns with setting the tone and style and giving a blueprint, ensuring the output is formatted exactly as needed.
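When the output feeds into other code, one option (not part of the infographic, which asks for plain text) is to request the same fields as a JSON array and validate the reply. In this sketch the field names in `REQUIRED_FIELDS` and the `validate_hikes` helper are hypothetical; the check covers structure only, so it complements rather than replaces the Warnings section.

```python
import json

# Hypothetical field names mirroring the data points the infographic asks for.
REQUIRED_FIELDS = [
    "name", "start_address", "end_address",
    "distance", "drive_time", "hike_duration", "why_unique",
]


def validate_hikes(reply: str, expected_count: int = 3) -> list[str]:
    """Return a list of format problems in the model's JSON reply; empty means it fits."""
    try:
        hikes = json.loads(reply)
    except json.JSONDecodeError as exc:
        return [f"not valid JSON: {exc.msg}"]
    if not isinstance(hikes, list):
        return ["expected a JSON array of hikes"]
    problems = []
    if len(hikes) != expected_count:
        problems.append(f"expected {expected_count} hikes, got {len(hikes)}")
    for i, hike in enumerate(hikes):
        if not isinstance(hike, dict):
            problems.append(f"hike {i} is not an object")
            continue
        missing = [field for field in REQUIRED_FIELDS if field not in hike]
        if missing:
            problems.append(f"hike {i} missing {missing}")
    return problems


# A made-up reply with too few hikes and missing fields.
reply = '[{"name": "Example Trail", "distance": "5 mi"}]'
for problem in validate_hikes(reply):
    print(problem)
```

An empty result means the reply matches the requested shape; it says nothing about whether the trails actually exist.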

3. Warnings: Include Cautionary Notes for Accuracy

The Warnings section adds guardrails to maintain accuracy. From the infographic: "Be careful to make sure that the name of the trail is correct, that it actually exists, and that the time is correct." This instructs the AI to double-check critical details (trail names, existence, and time estimates), reducing the risk of factual errors or hallucinations.

Connection to Principles: This ties directly to killing ambiguity, enforcing precision in the response.

4. Context Dump: Provide Background to Personalize the Response

The Context Dump supplies background information to tailor the response to the user’s needs. The infographic offers a rich example: "For context: my girlfriend and I hike a ton! we’ve done pretty much all of the local SF hikes, whether that’s presidio or golden gate park. we definitely want to get out of town -- we did mount tam pretty recently, the whole thing from the beginning of the stairs to stinson -- it was really long and we are definitely in the mood for something different this weekend! ocean views would still be nice. we love delicious food. one thing I loved about the mt tam hike is that it ends with a celebration (Arriving in town to breakfast!) The old missile silos and stuff near Discovery point is cool but I’ve just done the hike probably 20x at this point. We won’t be seeing eachother for a few weeks (she has to stay in LA for work) so the uniqueness here really counts." This paints a vivid picture: experienced hikers, tired of local options, seeking a unique experience with specific preferences (ocean views, a celebratory endpoint), and a personal motivation (a special outing before a separation).

Connection to Principles: This is context-loading at its finest, grounding the AI in the user’s situation and preferences.

Putting It Together: The Full Prompt in Action

Here’s how the infographic combines these components into a single, cohesive prompt:

"I want a list of the best medium-length hikes within two hours of San Francisco. Each hike should provide a cool and unique adventure, and be lesser known. For each hike, return the name of the hike as I’d find it on AllTrails, then provide the starting address of the hike, the ending address of the hike, distance, drive time, hike duration, and what makes it a cool and unique adventure. Return the top 3. Be careful to make sure that the name of the trail is correct, that it actually exists, and that the time is correct. For context: my girlfriend and I hike a ton! we’ve done pretty much all of the local SF hikes, whether that’s presidio or golden gate park. we definitely want to get out of town -- we did mount tam pretty recently, the whole thing from the beginning of the stairs to stinson -- it was really long and we are definitely in the mood for something different this weekend! ocean views would still be nice. we love delicious food. one thing I loved about the mt tam hike is that it ends with a celebration (Arriving in town to breakfast!) The old missile silos and stuff near Discovery point is cool but I’ve just done the hike probably 20x at this point. We won’t be seeing eachother for a few weeks (she has to stay in LA for work) so the uniqueness here really counts."

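What You Get: A response listing three hikes, each with a name, addresses, distance, times, and unique features, tailored to the couple’s experience level, desire for novelty, and love of ocean views and food-centric endpoints. The warnings make errors less likely but cannot rule them out, so trail names and times are still worth confirming on AllTrails.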
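Because the four components are just labeled pieces of text, they can be assembled from reusable parts. The `StructuredPrompt` class below is a hypothetical sketch, not an OpenAI API: it joins the parts in the infographic’s order, prefixes the context with "For context:" as the original prompt does, and drops optional parts that are left empty. The strings in the usage example are abbreviated from the infographic.

```python
from dataclasses import dataclass


@dataclass
class StructuredPrompt:
    """Goal, Return Format, Warnings, and Context Dump, as in the infographic."""
    goal: str
    return_format: str
    warnings: str = ""
    context: str = ""

    def render(self) -> str:
        # Keep the infographic's order and skip optional parts that are empty.
        parts = [self.goal, self.return_format, self.warnings]
        if self.context.strip():
            parts.append(f"For context: {self.context}")
        return " ".join(p.strip() for p in parts if p.strip())


hike_prompt = StructuredPrompt(
    goal="I want a list of the best medium-length hikes within two hours of San Francisco.",
    return_format="Return the top 3.",
    warnings="Be careful to make sure that the name of the trail is correct.",
    context="ocean views would still be nice.",
)
print(hike_prompt.render())
```

Leaving `warnings` and `context` empty yields the lighter Goal-plus-Return-Format variant.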

Why This Structure Excels

This framework, as depicted in "The Anatomy of an o1 Prompt," works especially well with OpenAI’s o1 models because it:

- Ensures Specificity (Goal): The task is crystal-clear.

- Guides Output (Return Format): The response is structured and usable.

- Maintains Accuracy (Warnings): Errors are reduced.

- Personalizes Results (Context Dump): The output fits the user’s unique needs.

It’s a practical distillation of the core principles, offering a repeatable recipe for complex tasks.

Flexibility Note: While this structure shines for detailed queries, simpler prompts can still leverage its principles—say, just a Goal and Return Format—without needing the full quartet.

Good vs. Bad Prompts

With this structure in mind, let’s compare effective and ineffective prompts across different scenarios.

Creative Writing

- Bad: “Write a story.” Output: A meandering tale with no point.

- Good: “Craft a sci-fi short story about an AI uprising on Mars, with a twist ending.” Output: A gripping narrative that hits all the marks. Takeaway: Specificity and context drive coherence.

Factual Retrieval

- Bad: “What’s climate change?” Output: A shallow Wikipedia knockoff.

- Good: “Detail the mechanisms of climate change, focusing on greenhouse gas feedback loops.” Output: A deep, science-backed explanation. Takeaway: Precision unlocks depth.

Code Generation

- Bad: “Make a function.” Output: Useless gibberish.

- Good: “Write a Python function to compute Fibonacci numbers up to n, using recursion with memoization.” Output: Clean, working, memoized code. Takeaway: Clarity and constraints yield functional results.
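For reference, here is one plausible answer to the “good” prompt. Even this prompt leaves some room for interpretation: the sketch reads “up to n” as F(0) through F(n), which is an assumption, and uses the standard library’s `functools.lru_cache` for the memoization.

```python
from functools import lru_cache


@lru_cache(maxsize=None)
def fib(k: int) -> int:
    """Return the k-th Fibonacci number (F(0) = 0, F(1) = 1) via memoized recursion."""
    if k < 0:
        raise ValueError("k must be non-negative")
    if k < 2:
        return k
    return fib(k - 1) + fib(k - 2)


def fib_up_to(n: int) -> list[int]:
    """Return [F(0), F(1), ..., F(n)]."""
    # Filling the list in increasing order means each call finds its
    # predecessors already cached, so recursion depth stays small.
    return [fib(k) for k in range(n + 1)]


print(fib_up_to(10))  # [0, 1, 1, 2, 3, 5, 8, 13, 21, 34, 55]
```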

Taking It Up a Notch: Advanced Tricks

Even a solid prompt might need tweaking. Here’s how to refine like a pro:

- Iterate: “Too vague—focus on neural network training instead.”

- Follow Up: “Now break down backpropagation in detail.”

- Format It: “List the steps in a numbered sequence.”

- Narrow It: “Stick to supervised learning, skip the rest.”
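These refinements work in a conversation because each follow-up is read against the earlier turns; with stateless chat APIs, that typically means resending the whole history every time. The sketch below models the history as a list of role/content dictionaries, the shape used by chat-completion APIs such as OpenAI’s. It makes no API call, `follow_up` is a hypothetical helper, and the assistant replies are placeholders.

```python
# Each message is a {"role": ..., "content": ...} dict, as in common chat-completion APIs.
Message = dict[str, str]


def follow_up(history: list[Message], assistant_reply: str, next_prompt: str) -> list[Message]:
    """Return a new history with the model's reply and the user's refinement appended."""
    return history + [
        {"role": "assistant", "content": assistant_reply},
        {"role": "user", "content": next_prompt},
    ]


history: list[Message] = [
    {"role": "user", "content": "Explain how neural networks are trained."},
]
# Placeholder replies; in practice these come back from the model.
history = follow_up(history, "<first answer>", "Now break down backpropagation in detail.")
history = follow_up(history, "<second answer>", "List the steps in a numbered sequence.")

for message in history:
    print(f"{message['role']}: {message['content']}")
```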

For next-level control, try:

- Few-Shot Learning: Feed it a couple of examples to set the pattern. “Here’s a sample summary: [X]. Now do one for Y.”

- Chain-of-Thought: Prompt it to think step-by-step. “Solve this math problem and explain each step.”
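Both techniques come down to how the prompt text is laid out. This hypothetical `few_shot_prompt` helper writes each example as an `Input:`/`Output:` pair and ends with an open `Output:` for the model to complete; the optional `step_by_step` flag adds a chain-of-thought instruction. The layout is one common convention, not a requirement of any particular model.

```python
def few_shot_prompt(
    task: str,
    examples: list[tuple[str, str]],
    query: str,
    step_by_step: bool = False,
) -> str:
    """Build a prompt from a task line, worked examples, and a new query."""
    parts = [task]
    if step_by_step:
        # Chain-of-thought: ask for visible intermediate steps before the answer.
        parts.append("Work through the problem step by step, showing each step before the final answer.")
    for example_input, example_output in examples:
        # Few-shot: each pair demonstrates the pattern the model should follow.
        parts.append(f"Input: {example_input}\nOutput: {example_output}")
    # The open "Output:" cues the model to continue the pattern for the new query.
    parts.append(f"Input: {query}\nOutput:")
    return "\n\n".join(parts)


# Few-shot: placeholders stand in for a real sample text and summary.
print(few_shot_prompt(
    "Summarize each text in one sentence.",
    [("<sample text>", "<sample summary>")],
    "<text to summarize>",
))

# Chain-of-thought: no examples, just the step-by-step instruction.
print(few_shot_prompt(
    "Solve the math problem.",
    [],
    "A train covers 120 km in 1.5 hours. What is its average speed?",
    step_by_step=True,
))
```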

These techniques squeeze every ounce of capability out of the model, especially for tricky tasks.

Why This Matters

Prompt engineering isn’t a side hustle; it’s the key to making AI work for you. Whether you’re debugging code, drafting research, or planning a hike, the difference between a throwaway response and a game-changing one lies in how you frame the ask. The structure from "The Anatomy of an o1 Prompt", with its Goal, Return Format, Warnings, and Context Dump, offers a practical blueprint for OpenAI’s o1 models, turning abstract principles into actionable steps. In my experience, the best AI practitioners aren’t just model whisperers; they’re architects of intent, shaping raw compute into practical outcomes.

As these systems evolve, prompt design will only grow more critical. Master it, and you’re not just using AI—you’re directing it. So, next time you query a model, think like an engineer: define your goal, set your parameters, and watch the results roll in.
