What is Qwen AI by Alibaba, and how do you use it?

A guide to what Qwen is, its architecture, its multilingual and coding capabilities, and how to use Qwen AI privately on Okara.

When we talk about the biggest models in AI, names like ChatGPT, Gemini, and Claude usually dominate the conversation. But there is a powerful AI model rising from the East that deserves your attention. It's called Qwen.

For developers, data scientists, and enterprise leaders, the question "What is Qwen?" comes up more and more often. The answer is more than just another large language model (LLM): Qwen is a sophisticated ecosystem of models developed by Alibaba Cloud that pushes the boundaries of multilingual proficiency, long-context processing, and complex reasoning.

Qwen, or Tongyi Qianwen, distinguishes itself not merely through raw parameter counts but through specialized architectural optimizations that make it exceptionally capable in coding, mathematics, and cross-cultural linguistic tasks. This guide provides a technical deep dive into the architecture, capabilities, and practical applications of Qwen AI, offering a roadmap for leveraging this powerful tool in your workflows.

What is Qwen? The Architecture of Alibaba’s AI Powerhouse

At a fundamental level, what is Qwen? It is a family of large language models based on the Transformer architecture, designed to handle a diverse array of tasks ranging from creative writing to complex software engineering.

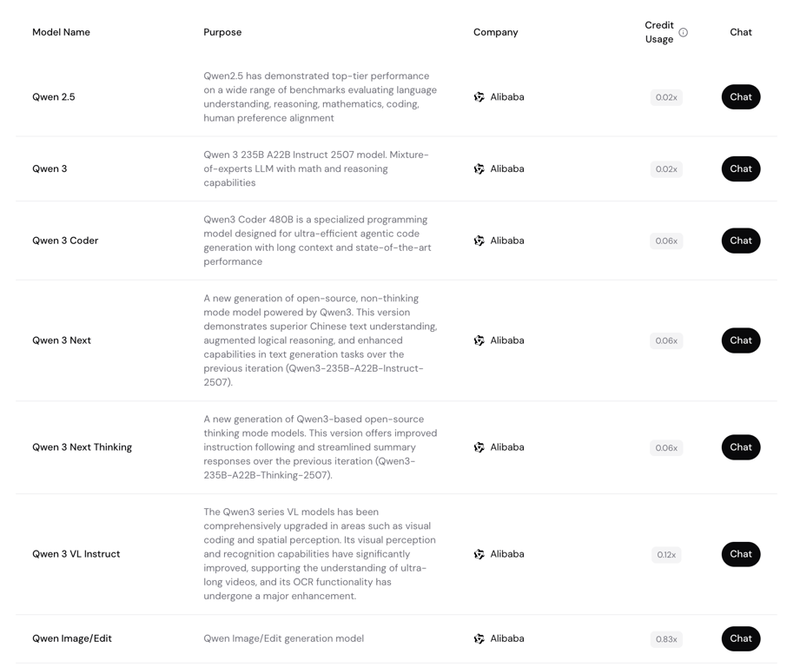

Developed by Alibaba Cloud, the Qwen series includes several variants tailored for different computational needs and use cases:

- Qwen-Max: The flagship proprietary model, a very large model whose full architectural details Alibaba has not published, designed to rival GPT-4 and Claude 3 Opus in reasoning and general intelligence.

- Qwen-Plus: A balanced model offering a blend of high performance and cost-efficiency, suitable for enterprise applications.

- Qwen-Turbo: Optimized for speed and low latency, ideal for real-time applications like chatbots.

- Qwen-7B, 14B, 72B (Open Source): These are the open-weight versions that have taken the developer community by storm, frequently ranking at or near the top of benchmarks like Hugging Face's Open LLM Leaderboard.

The Technical Edge: Why Qwen is Different

Qwen is not simply a clone of existing Western models. Its training corpus is large and diverse, with a significant proportion of Chinese and other non-English data alongside English. This bilingual emphasis helps Qwen handle Chinese-English and other cross-lingual tasks more effectively than models trained predominantly on English-centric data.

Qwen also uses alignment techniques such as Reinforcement Learning from Human Feedback (RLHF) to improve safety and helpfulness, while specialized variants such as Qwen-Coder and Qwen-Math are fine-tuned on domain-specific datasets to reach competitive, often state-of-the-art (SOTA), performance among open models in technical domains.

Features and Capabilities: A Technical Breakdown

To truly understand what Qwen is, one must look under the hood at its specific capabilities. It offers a suite of features that cater specifically to technical users and enterprise needs.

1. Multilingual Mastery and Cross-Cultural Semantics

Most LLMs exhibit a "resource bias," performing exceptionally well in English but degrading in quality for other languages. Qwen breaks this mold. It supports over 29 languages, but its proficiency in Asian languages is particularly noteworthy.

- Semantic Nuance: It captures cultural idioms and context in Chinese, Japanese, and Korean that often escape Western models.

- Translation Accuracy: For technical translation, such as translating software documentation or legal contracts between Mandarin and English, Qwen demonstrates superior fidelity, preserving technical accuracy alongside linguistic flow.

2. Massive Context Window Processing

In the era of Retrieval-Augmented Generation (RAG) and long-document analysis, context window size is a critical metric. Qwen supports context windows of up to 128,000 tokens, and some variants, such as Qwen2.5-Turbo, extend this to 1 million tokens.

- Practical Implication: This allows users to feed the model entire technical manuals, long code repositories, or lengthy financial reports in a single prompt.

- Recall Accuracy: Many models suffer from the "lost in the middle" phenomenon, where information in the middle of a long prompt is effectively ignored. Alibaba reports that Qwen maintains high recall across its context window in long-context retrieval tests, which makes it well suited to data extraction tasks.
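Even with a 128,000-token window, it helps to check whether a document fits before sending it, and to split it into chunks when it does not. The sketch below assumes a rough heuristic of about four characters per token, which is English-centric (Chinese text typically packs fewer characters per token), so exact counts should come from the model's own tokenizer. The window size and the output reserve are illustrative parameters, not values read from any Qwen API.

```python
from typing import Callable, List

CONTEXT_WINDOW = 128_000  # assumed window size, taken from the figure above


def approx_tokens(text: str) -> int:
    """Rough token estimate: ~4 characters per token (English-centric heuristic)."""
    return max(1, len(text) // 4)


def fits_in_context(
    text: str,
    reserve_for_output: int = 4_000,
    window: int = CONTEXT_WINDOW,
    count_tokens: Callable[[str], int] = approx_tokens,
) -> bool:
    """True if the prompt plus a reserved output budget fits in the window."""
    return count_tokens(text) + reserve_for_output <= window


def split_into_chunks(
    paragraphs: List[str],
    max_tokens: int,
    count_tokens: Callable[[str], int] = approx_tokens,
) -> List[str]:
    """Greedily pack whole paragraphs into chunks of at most max_tokens each.

    Separators are not counted, and a single paragraph larger than
    max_tokens becomes its own (oversized) chunk.
    """
    chunks: List[str] = []
    current: List[str] = []
    current_tokens = 0
    for para in paragraphs:
        n = count_tokens(para)
        if current and current_tokens + n > max_tokens:
            chunks.append("\n\n".join(current))
            current, current_tokens = [], 0
        current.append(para)
        current_tokens += n
    if current:
        chunks.append("\n\n".join(current))
    return chunks


# Hypothetical long manual: 300 paragraphs of ~700 estimated tokens each.
doc_paragraphs = ["Section text. " * 200] * 300
print(fits_in_context("\n\n".join(doc_paragraphs)))  # False
print(len(split_into_chunks(doc_paragraphs, max_tokens=100_000)))  # 3
```

Swapping `approx_tokens` for a real tokenizer's count keeps the same logic while making the budget exact.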

3. Advanced Coding Capabilities (CodeQwen)

Qwen’s coding capabilities are among its strongest selling points. The specialized CodeQwen models are pre-trained on a massive corpus of code across languages like Python, Java, C++, and Go.

- Code Generation: It can generate boilerplate code, complex algorithms, and even entire functional modules based on natural language descriptions.

- Debugging and Refactoring: Developers can paste erroneous code snippets, and Qwen can often identify the bug and suggest refactorings that improve time complexity or readability.

- SQL Generation: It excels at converting natural language queries into complex SQL statements, streamlining database interactions for non-technical users.
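When an LLM generates SQL from natural language, it is prudent to validate the query before running it. The sketch below uses Python's built-in `sqlite3` module on an in-memory database. The schema, the prompt wording, and the hard-coded `generated_sql` string (a stand-in for a real Qwen response) are all hypothetical, and the check deliberately accepts only a single SELECT statement.

```python
import sqlite3

# Hypothetical schema, used both in the prompt and in the demo database.
SCHEMA = """
CREATE TABLE orders (
    id INTEGER PRIMARY KEY,
    customer TEXT NOT NULL,
    amount REAL NOT NULL,
    created_at TEXT NOT NULL
);
"""


def build_sql_prompt(schema: str, question: str) -> str:
    """Assemble a text-to-SQL prompt that gives the model the table schema."""
    return (
        "You are a SQL assistant. Using only this SQLite schema:\n"
        f"{schema}\n"
        "Write one SELECT statement that answers the question. Return only the SQL.\n"
        f"Question: {question}"
    )


def run_readonly_query(conn: sqlite3.Connection, sql: str) -> list:
    """Execute model-generated SQL only if it is a single SELECT statement."""
    statement = sql.strip().rstrip(";").strip()
    if not statement.lower().startswith("select") or ";" in statement:
        raise ValueError("Only a single SELECT statement is allowed")
    return conn.execute(statement).fetchall()


conn = sqlite3.connect(":memory:")
conn.executescript(SCHEMA)
conn.executemany(
    "INSERT INTO orders (customer, amount, created_at) VALUES (?, ?, ?)",
    [("alice", 120.0, "2024-01-05"), ("bob", 80.0, "2024-01-06"), ("alice", 30.0, "2024-02-01")],
)
prompt = build_sql_prompt(SCHEMA, "What is each customer's total order amount?")
# Hypothetical model output; in practice this would come from a Qwen API call with `prompt`.
generated_sql = "SELECT customer, SUM(amount) FROM orders GROUP BY customer ORDER BY customer;"
print(run_readonly_query(conn, generated_sql))  # [('alice', 150.0), ('bob', 80.0)]
```

The string check is intentionally conservative (it also rejects valid queries such as CTEs); opening a real database read-only, for example with `sqlite3.connect("file:app.db?mode=ro", uri=True)` where `app.db` is a placeholder, is a stronger safeguard.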

4. Mathematical and Logical Reasoning

Qwen shows robust capabilities in Chain-of-Thought (CoT) reasoning. When presented with complex mathematical problems or logic puzzles, it doesn't just guess the answer; it breaks the problem down into intermediate steps. This training focus on reasoning makes it highly effective for:

- Data Analysis: Interpreting complex datasets and deriving insights.

- Algorithmic Logic: Solving competitive programming problems or optimizing logical workflows.
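Chain-of-thought behavior can be encouraged through the prompt, and the final answer can then be pulled out of the reasoning. The sketch below assumes an OpenAI-style `messages` list like the one in the DashScope example in Option 3, and an illustrative convention of ending the response with a `Final answer:` line; neither the system prompt wording nor that marker is a Qwen requirement.

```python
import re
from typing import Dict, List, Optional

# Illustrative convention (an assumption, not a Qwen requirement).
ANSWER_MARKER = "Final answer:"


def build_cot_messages(problem: str) -> List[Dict[str, str]]:
    """Ask the model to reason step by step and end with a marked answer line."""
    system = (
        "Solve the problem step by step, showing intermediate reasoning. "
        f"End with a line of the form '{ANSWER_MARKER} <answer>'."
    )
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": problem},
    ]


def extract_final_answer(response_text: str) -> Optional[str]:
    """Return the text after the last 'Final answer:' marker, or None if absent."""
    matches = re.findall(re.escape(ANSWER_MARKER) + r"\s*(.+)", response_text)
    return matches[-1].strip() if matches else None


# A canned response standing in for real model output.
sample = (
    "Step 1: 12 apples shared by 3 people gives 12 / 3 = 4 each.\n"
    "Step 2: Each person eats 1, leaving 4 - 1 = 3.\n"
    "Final answer: 3"
)
print(extract_final_answer(sample))  # 3
```

Keeping the answer on a marked line lets you score or post-process results automatically while still reading the intermediate steps when something looks wrong.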

How to Use Qwen AI: A Step-by-Step Guide

Leveraging Qwen requires different approaches depending on whether you are a casual user, a developer, or an enterprise architect.

Option 1: Get Access to All Qwen AI Models on Okara

Okara hosts 30+ open-source AI models, and you can access Qwen's open-weight models there under one subscription.

While Qwen's capabilities are impressive, using public cloud endpoints carries data privacy risks, particularly for enterprises handling proprietary code or sensitive customer data.

This is where Okara provides a critical infrastructure layer. Okara is a private AI workspace with privately hosted open-source models. It supports Qwen's open-weight models, allowing you to leverage Alibaba's technology without exposing your data to the public cloud.

The Okara Advantage for Qwen Users

- Data Sovereignty: On Okara, your interaction data is encrypted. The platform adheres to a strict "zero-training" policy, meaning your prompts and Qwen's outputs are never harvested to train future model iterations.

- Unified Workspace: Okara runs Qwen alongside other top-tier models like Llama 3 or Mistral. This makes A/B testing easy: you can prompt Qwen and Llama with the same coding task and compare the outputs instantly.

- Enterprise-Grade Security: With features like user-controlled decryption keys and isolated environments, Okara transforms Qwen from a public tool into a secure enterprise asset.

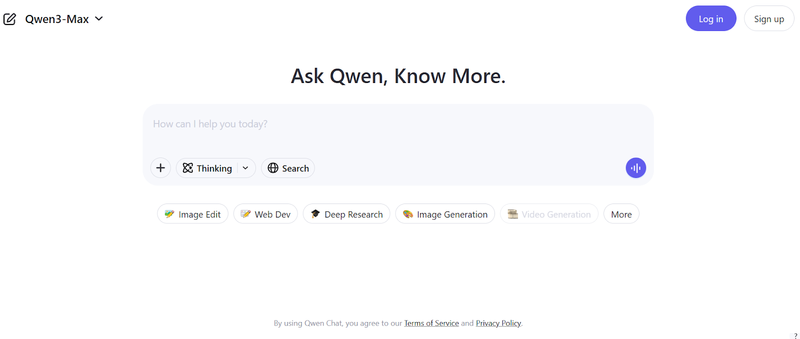

Option 2: The Web Interface (Tongyi Qianwen)

For immediate access without coding, Alibaba provides a web-based chat interface similar to ChatGPT.

- Access: Navigate to the official Tongyi Qianwen website (tongyi.aliyun.com).

- Registration: You will typically need an Alibaba Cloud account or a supported mobile number for verification.

- Interface Navigation:
  - Standard Chat: Use this for general queries, writing assistance, and translation.
  - Document Analysis: Upload PDFs or Word documents directly to the chat. The model parses the text and allows you to query the document content (for example, "Summarize the key findings on page 12").
  - Image Generation: Use the multimodal capabilities to describe an image and have Qwen generate it.

Option 3: API Integration for Developers

For building applications, you will want to use the Alibaba Cloud Model Studio API.

- Obtain API Key: Log in to the Alibaba Cloud console and navigate to the Model Studio section to generate an API key.

- SDK Installation: Install the Python SDK (e.g., pip install dashscope).

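- Basic Call Structure:

```python
import os
from http import HTTPStatus

import dashscope

# Read the Model Studio API key from the environment, falling back to a placeholder.
dashscope.api_key = os.getenv('DASHSCOPE_API_KEY', 'YOUR_API_KEY')

response = dashscope.Generation.call(
    model='qwen-max',
    messages=[{'role': 'user', 'content': 'Write a Python script for a binary search tree.'}],
    result_format='message',  # return choices as role/content messages
)

if response.status_code == HTTPStatus.OK:
    print(response.output.choices[0]['message']['content'])
else:
    # On failure, error details are in code/message rather than in output.
    print(f'Request failed: {response.status_code} {response.code} {response.message}')
```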

- Fine-Tuning: The platform supports fine-tuning custom models using your own datasets, allowing you to tailor Qwen’s responses to your specific industry jargon or data formats.
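Fine-tuning data is usually prepared as JSON Lines, one training conversation per line. The sketch below writes records in a chat-style `messages` layout; that layout is an assumption modeled on common chat fine-tuning formats, so check the exact schema Model Studio expects before uploading. The file name `train_sample.jsonl` and the example pairs are hypothetical.

```python
import json
from pathlib import Path
from typing import Iterable, Tuple


def write_sft_jsonl(
    pairs: Iterable[Tuple[str, str]],
    path: Path,
    system_prompt: str = "You are a helpful assistant.",
) -> int:
    """Write (user, assistant) pairs as chat-format JSONL; return the record count."""
    count = 0
    with path.open("w", encoding="utf-8") as f:
        for user_text, assistant_text in pairs:
            record = {
                "messages": [
                    {"role": "system", "content": system_prompt},
                    {"role": "user", "content": user_text},
                    {"role": "assistant", "content": assistant_text},
                ]
            }
            # ensure_ascii=False keeps Chinese and other non-ASCII text readable.
            f.write(json.dumps(record, ensure_ascii=False) + "\n")
            count += 1
    return count


# Hypothetical domain-specific examples (industry jargon and a translation task).
examples = [
    ("What does 'EBITDA' stand for?",
     "Earnings before interest, taxes, depreciation, and amortization."),
    ("把'资产负债表'翻译成英文。", "Balance sheet."),
]
n = write_sft_jsonl(examples, Path("train_sample.jsonl"))
print(n)  # 2
```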

Option 4: Local Deployment (Open Source)

For complete control, you can run the open-weights versions (like Qwen-14B-Chat) locally using tools like llama.cpp or Hugging Face transformers.

- Hardware Requirements: At 16-bit precision, a 14B-parameter model needs roughly 28 GB of VRAM for its weights alone; with 8-bit or 4-bit quantization, it fits on a GPU with 16-24 GB of VRAM.

- Setup: Clone the repository from Hugging Face, set up your Python environment, and load the model weights to run inference offline.
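The hardware figures come from simple arithmetic: weight memory is roughly the parameter count times the bytes stored per parameter. The sketch below encodes that rule of thumb; the optional `overhead` multiplier for the KV cache and activations is a loose assumption that varies with context length and batch size, not a measured value.

```python
def estimate_weight_memory_gb(num_params: float, bits_per_param: int, overhead: float = 1.0) -> float:
    """Approximate GPU memory (decimal GB) needed to hold model weights.

    bits_per_param: 16 for FP16/BF16, 8 or 4 for common quantized formats.
    overhead: multiplier for KV cache/activations (1.0 = weights only; an assumption).
    """
    bytes_total = num_params * bits_per_param / 8
    return bytes_total / 1e9 * overhead


for bits in (16, 8, 4):
    gb = estimate_weight_memory_gb(14e9, bits)
    print(f"14B model at {bits}-bit: ~{gb:.0f} GB for weights")
# 14B model at 16-bit: ~28 GB for weights
# 14B model at 8-bit: ~14 GB for weights
# 14B model at 4-bit: ~7 GB for weights
```

At 16-bit precision the weights alone take about 28 GB, so a 16-24 GB card generally relies on 8-bit or 4-bit quantization; real quantized files run slightly larger because they also store scaling metadata.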

Comparison: Qwen vs. ChatGPT, DeepSeek, Llama

To understand Qwen's market position, we must compare it technically against the incumbents.

Qwen-Max vs. GPT-4 (OpenAI)

- Coding: In benchmarks like HumanEval and MBPP (Mostly Basic Python Problems), Qwen-Max consistently scores within striking distance of GPT-4, and often outperforms GPT-3.5 Turbo significantly. It is particularly adept at generating code comments and documentation.

- Language Support: While GPT-4 is excellent at translation, Qwen demonstrates superior performance in Chinese-English and English-Chinese tasks, capturing subtleties in idioms and professional terminology that GPT-4 sometimes misses.

- Availability: GPT-4 is purely proprietary. Qwen offers an open-weight path (for example, its 72B model) that allows on-premise deployment, a flexibility GPT-4 does not offer.
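HumanEval and MBPP results are usually reported as pass@k: the probability that at least one of k sampled programs passes the unit tests. The estimator introduced alongside HumanEval computes it from n samples, c of which pass, as pass@k = 1 - C(n-c, k) / C(n, k). The sketch below implements that formula to explain the metric; it does not reproduce any published Qwen or GPT-4 score.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k estimate from n samples, c of which pass the tests."""
    if not 0 <= c <= n or not 1 <= k <= n:
        raise ValueError("require 0 <= c <= n and 1 <= k <= n")
    if n - c < k:
        # Every size-k subset must contain at least one passing sample.
        return 1.0
    return 1.0 - comb(n - c, k) / comb(n, k)


print(round(pass_at_k(10, 3, 1), 4))  # 0.3 (pass@1 reduces to c / n)
print(round(pass_at_k(10, 3, 5), 4))  # 0.9167
```

Because pass@1 equals the fraction of passing samples, it is the figure most comparable across model announcements; higher k values reward models that produce a correct solution at least occasionally.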

Qwen vs. DeepSeek AI

- Focus Areas: DeepSeek is another Chinese heavyweight, often celebrated for its "DeepSeek Coder" models. While DeepSeek is highly specialized for coding and math, Qwen tends to be a stronger "generalist."

- Multimodality: Qwen-VL (Vision Language) models are generally considered more mature than DeepSeek's current multimodal offerings, making Qwen a better choice for tasks involving image analysis and text extraction.

- Context Window: Qwen generally offers larger stable context windows across its model family compared to DeepSeek's standard offerings.

Qwen vs. Llama 3 (Meta)

- Open Source Utility: Llama 3 is a standard reference point for Western open-source models. However, for users in the APAC region or those working with multilingual datasets, Qwen's 72B models often outperform Llama 3 70B thanks to their more diverse training data.

Conclusion

Qwen AI by Alibaba is a major addition to the world of artificial intelligence, offering strong capabilities in multilingual processing, long-context understanding, and advanced coding. Its versatility makes it a valuable tool for developers, researchers, and enterprises alike.

With secure deployment options like Okara, users can harness Qwen's power without compromising data privacy. Whether you're solving complex problems, analyzing large datasets, or building innovative applications, Qwen stands out as a reliable and forward-thinking AI solution. As the AI landscape continues to evolve, Qwen is likely to play a pivotal role in shaping the future of intelligent systems.

FAQs

- What is Qwen used for?
  Qwen is designed for a range of tasks including programming assistance, multilingual translation, text generation, document summarization, data analysis, and multimodal applications involving images and audio. Its architecture supports both technical and creative workflows across diverse industries.

- What does Qwen stand for?
  Qwen is short for "Tongyi Qianwen" (通义千问) in Chinese, which means "Seeking Answers from a Thousand Questions." The name reflects Alibaba's vision for a model capable of answering a broad spectrum of real-world queries.

- Is Qwen better than DeepSeek?
  Qwen and DeepSeek both have strong capabilities. Qwen tends to be more versatile, especially in multilingual and general AI tasks, while DeepSeek is recognized for its focused performance on mathematical and coding problems. The best choice depends on specific use cases and deployment needs.

- Is Qwen safe to use?
  Qwen employs alignment techniques like RLHF for safety, and safeguards are in place on the public cloud to limit misuse. For complete privacy and data protection, use Qwen on secure platforms like Okara or deploy open-source models locally.

- Is Qwen AI fully free?
  Many Qwen models are open-source and free to use for personal and research purposes. However, commercial or large-scale API access, as well as some enhanced models, may carry usage costs or require a paid license.

- What is the difference between Qwen-Chat and Qwen-Base?
  Qwen-Base is the raw pre-trained model. It works like a text-completion engine: if you give it a question, it might generate another question rather than answering it.

  Qwen-Chat has undergone fine-tuning and RLHF to follow instructions. This is the version you want for a chatbot experience or for following specific commands.

- How does Qwen handle data privacy?
  If you use the public free version of Qwen, your data may be used to improve the service. For strict privacy, use the enterprise API with data privacy agreements, run the open-source model locally, or use a secure platform like Okara.

- Does Qwen support function calling?
  Yes, Qwen models are trained to support function calling (also known as tool use). This means developers can describe external tools (like a calculator or a weather API) to Qwen, and the model can output structured JSON that calls those tools to answer a user's query.
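The Chat versus Base distinction in the FAQ comes partly from the conversation template Chat models are fine-tuned on. Qwen's chat models use the ChatML layout, with `<|im_start|>` and `<|im_end|>` markers around each turn. The sketch below renders that layout by hand to show what the model sees; in practice, the tokenizer's `apply_chat_template` method in Hugging Face transformers produces the exact string (including any default system prompt a given model ships with), so treat this as illustrative.

```python
from typing import Dict, List


def to_chatml(messages: List[Dict[str, str]], add_generation_prompt: bool = True) -> str:
    """Render role/content messages in ChatML, the turn format used by Qwen chat models."""
    parts = [f"<|im_start|>{m['role']}\n{m['content']}<|im_end|>\n" for m in messages]
    if add_generation_prompt:
        # Open an assistant turn so the model continues as the assistant.
        parts.append("<|im_start|>assistant\n")
    return "".join(parts)


chat_prompt = to_chatml([
    {"role": "system", "content": "You are a helpful assistant."},
    {"role": "user", "content": "What is 2 + 2?"},
])
print(chat_prompt)
```

Chat models are fine-tuned on conversations in this shape, which is why they answer the open assistant turn and then stop at `<|im_end|>`.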
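The function-calling loop from the FAQ can be sketched as follows, assuming the OpenAI-style `tools` schema (which Qwen's OpenAI-compatible API mode also uses). The `get_weather` function, its canned data, and the `tool_call` dict standing in for model output are hypothetical; a real application would send `TOOLS` with the request and read the tool call from the model's response.

```python
import json
from typing import Any, Callable, Dict


def get_weather(city: str) -> Dict[str, Any]:
    """Hypothetical tool: returns canned data instead of calling a weather API."""
    fake_data = {"Hangzhou": {"temp_c": 24, "sky": "cloudy"}}
    return fake_data.get(city, {"error": f"no data for {city}"})


# Tool description sent to the model (OpenAI-style JSON Schema).
TOOLS = [
    {
        "type": "function",
        "function": {
            "name": "get_weather",
            "description": "Get the current weather for a city.",
            "parameters": {
                "type": "object",
                "properties": {"city": {"type": "string", "description": "City name"}},
                "required": ["city"],
            },
        },
    }
]

# Maps tool names the model may request to local Python functions.
REGISTRY: Dict[str, Callable[..., Any]] = {"get_weather": get_weather}


def dispatch_tool_call(tool_call: Dict[str, Any]) -> str:
    """Run the requested tool and return its result as a JSON string for the next turn."""
    name = tool_call["function"]["name"]
    # The model emits arguments as a JSON-encoded string.
    args = json.loads(tool_call["function"]["arguments"])
    if name not in REGISTRY:
        raise KeyError(f"unknown tool: {name}")
    return json.dumps(REGISTRY[name](**args))


# Stand-in for a tool call parsed from the model's response.
tool_call = {"function": {"name": "get_weather", "arguments": '{"city": "Hangzhou"}'}}
print(dispatch_tool_call(tool_call))  # {"temp_c": 24, "sky": "cloudy"}
```

The returned string would then be appended to the conversation as a `tool` role message so the model can compose its final answer.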
